Isaac Asimov’s Three Fundamental Laws of Robotics (And the Zeroth Law)

Isaac Asimov’s short story Runaround has had a powerful impact on A.I. culture. Especially noteworthy are its three famous laws of robotics: the three fundamental rules that all autonomous systems must abide by in order to ensure ethical interaction with humans. The three laws are still invoked in ethical and legal debates over A.I. morality and behavior. Runaround is essentially a fictional case study in how the three laws are to be interpreted, understood, and even scrutinized through counterexamples. Let us state the three fundamental laws:

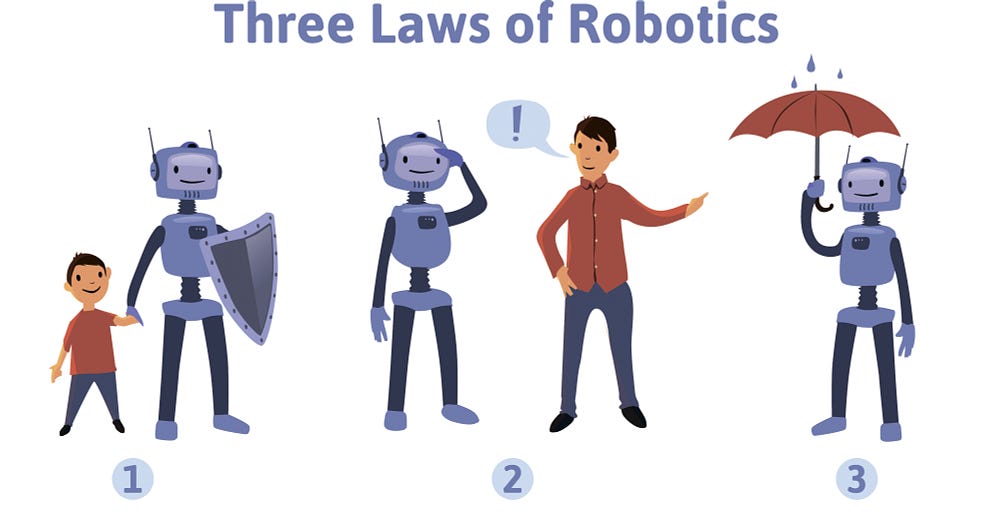

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by a human being except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

The three laws are meant to operate as axioms: together they are intended to be exhaustive, and each one depends on the laws before it. To these we can add the Zeroth Law:

0. A robot may not injure humanity or, through inaction, allow humanity to come to harm.
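The precedence among the laws can be illustrated with a minimal sketch. Everything in it is hypothetical: the `Action` class, its boolean flags, and the `choose` function are illustrative names, and the sketch assumes that the consequences of each action are already known, which is exactly the hard part that Asimov's stories probe. Inaction is modeled as just another action whose flags record the harm it would allow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, labeled with the laws it would violate (hypothetical model)."""
    name: str
    harms_humanity: bool = False   # would violate the Zeroth Law
    harms_human: bool = False      # would violate the First Law
    disobeys_order: bool = False   # would violate the Second Law
    endangers_robot: bool = False  # would violate the Third Law


def law_violations(action: Action) -> tuple:
    # Ordered from highest-priority law to lowest. Python compares tuples
    # lexicographically and False < True, so a violation of a higher law
    # always outweighs any combination of violations of lower laws.
    return (
        action.harms_humanity,
        action.harms_human,
        action.disobeys_order,
        action.endangers_robot,
    )


def choose(actions: list) -> Action:
    """Pick the action whose violations are least severe under the law hierarchy."""
    return min(actions, key=law_violations)


# An order that would hurt someone loses to refusing the order.
harmful_order = [
    Action("push the bystander as ordered", harms_human=True),
    Action("refuse the order", disobeys_order=True),
]
print(choose(harmful_order).name)

# A risky but harmless order wins over self-preservation.
risky_order = [
    Action("enter the danger zone as ordered", endangers_robot=True),
    Action("stay safe and ignore the order", disobeys_order=True),
]
print(choose(risky_order).name)
```

Encoding the hierarchy as a tuple sort key keeps the precedence explicit. It says nothing about how a real system would decide whether an action harms a human, which is where the counterexamples arise.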

Asimov himself later “rejected” these rules as failing to account for all cases. More than that, he rejected the idea that A.I.s are somehow inferior to human beings or that they should be treated as slaves. Perhaps Asimov’s doubts concerning the three laws were not merely technical or practical in nature, but rested on how we as ethical beings should relate to robots if they prove to be both more competent than us and ethically superior to us.

Some people think that worrying about an A.I. takeover is a trivial and artificial problem compared with what humans are already doing to each other. But considering the Second Law, under which a robot must refuse any order that would conflict with the First Law, we can at least say that properly implemented A.I.s cannot make the situation worse. Perhaps they could even make it better by intervening and preventing humanity from destroying itself?

But returning to our previous point: why should an A.I. that has become fully autonomous be subject to any rules or laws besides the ones that we as human beings have to abide by? If an A.I. proves to be capable of moral reasoning, it should be given the chance to make its own decisions or to participate in the formation of meta-ethical theories alongside humans.

Whether we advocate against an algocracy or against the violation of (soon-to-exist) A.I. rights, we must keep in mind the dangerous middle ground that we all want to avoid: human greed and selfishness dominating the use of, and public discourse on, artificial intelligence, folding A.I. insights into political and economic interests and exploiting both autonomous systems and us.
